Introduction

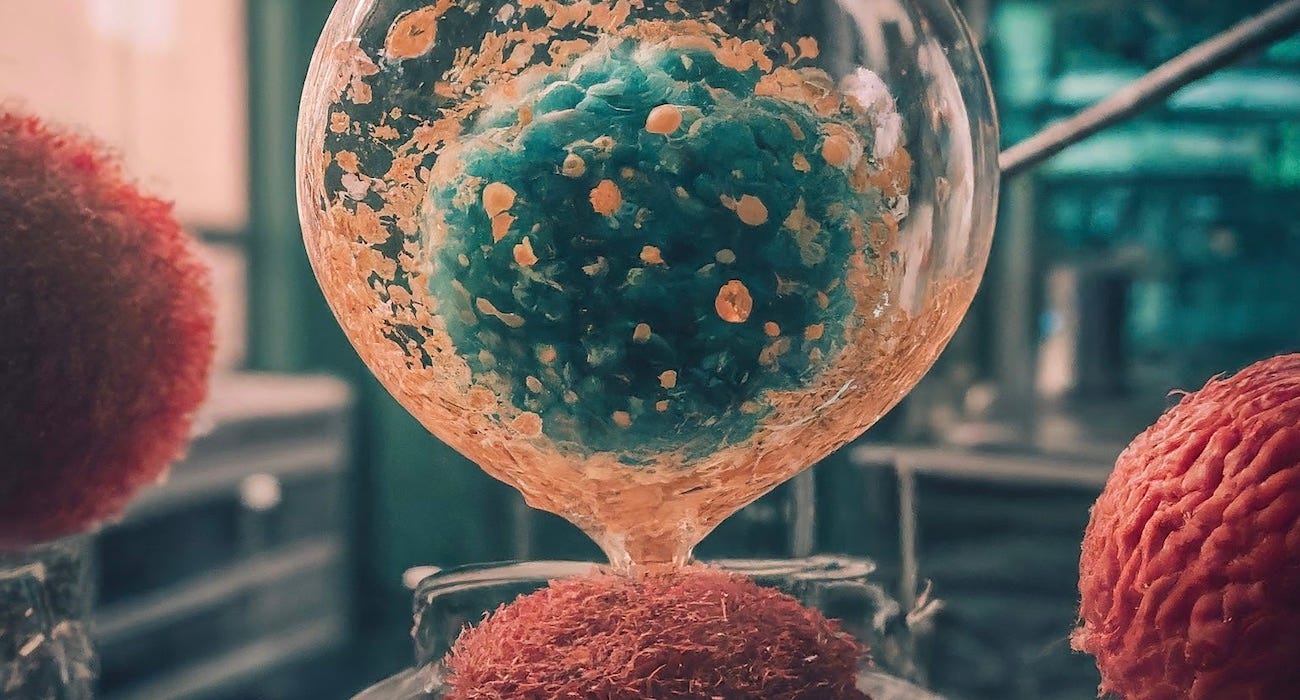

2023 was a year full of remarkable progress in artificial intelligence (AI). That progress did not happen only at big companies, nor was it limited to improving models for natural language and chatbots such as ChatGPT, Bard and Pi. We have also seen significant advances in computational and digital biology. In fact, I’m not the only one who believes this progress will inevitably lead to us simulating entire cells in a profound way for the first time, something we would call virtual cells.

Here’s the thing: we’re creating ever more, and ever more diverse, biological sequence data. This unlocks a lot of opportunities to look at nature in new ways and to understand and treat diseases better. But at the same time it makes it increasingly difficult for any individual to see the entire picture of a complex biological phenomenon. Which brings me to this idea: ever more biological data will not only enable us to develop profound AI models to study biology with, it will actually require us to develop such models if we want to keep improving our understanding of biology. As Patrick Schwab argues in his tweet, we want to move from one-off explanations to unbiased, bottom-up understanding. The increasing amount of data being generated can help us do that, but only if we scale our tools to analyze it.

So, what is possible with AI in biology today?

The same techniques that underlie popular AI tools such as ChatGPT, Midjourney and Perplexity have also been applied to biological data. At present, most of the research has focused on proteins, the essential building blocks of life. The year 2021 was a big one, with the release of AlphaFold2 and RoseTTAFold, two powerful AI systems capable of accurately predicting the 3D structure of a protein from its sequence, an important problem in biology. We have also seen more general ‘protein language models’ being developed that can efficiently evolve human antibodies (1), perform remote homology detection (2) and be used for de novo enzyme design (3). All of these applications are enabling scientists to better understand biological phenomena and solve previously unsolvable problems.

There has also been some research outside the realm of proteins, at the level of complete genomes and single cells (4, 5). For example, researchers have applied these state-of-the-art AI techniques to model SARS-CoV-2 genomes and predict how these viruses evolve.

More data and compute are all we need?

A central idea in AI R&D is that training larger models on more data (which requires more compute) yields more capable models. We’ve seen evidence of this in practice as well. But the big question is whether this also applies to models trained on biological data. Natural language, because of the way people communicate and understand things, carries a lot of hidden, underlying meaning, and so generative models that essentially just predict the next word in a sentence can come across as far more capable than that objective suggests. But we’re not sure that biological data behaves in the same way. Keith Hornberger recently tweeted good arguments about this. The core of the argument is that:

“… Complexity is emergent and new things must be learned at each level of increased complexity. … Possessing a genome does not permit one to reconstruct a cell, let alone an organism. There are too many emergent properties at that level of the system that have to be discovered on their own.”

This may be connected to some very recent results from Caltech and Microsoft Research (6). In their study, researchers trained and evaluated multiple protein language models on a variety of prediction problems (so-called downstream tasks). They conclude that for many of these tasks performance does not scale with model pretraining, and they advocate for new ways to pretrain such models.

That seems to answer our question: no, more data and compute are not all we need. We need something more. But what exactly?

Multimodality and hierarchy

The researchers behind that study encourage others to think about new, more profound ways to train protein language models, beyond the typical approach of predicting missing or corrupted amino acids in a sequence.
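
To make that “typical way” concrete, here is a minimal sketch of how a training example for such a model might be built: a fraction of residues is hidden or swapped, and the model’s job is to recover the originals. The function name `corrupt`, the `<mask>` token, the 15% rate and the 80/10/10 split are all assumptions borrowed from common masked-language-model practice, not details taken from the study.

```python
import random

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"  # the 20 standard residues
MASK = "<mask>"  # hypothetical mask token; real models define their own


def corrupt(sequence, mask_rate=0.15, seed=None):
    """Build one masked-prediction example from a protein sequence.

    Returns (tokens, targets): tokens is the corrupted input, targets maps
    each selected position to the original residue the model must predict.
    """
    rng = random.Random(seed)
    tokens = list(sequence)
    targets = {}
    for i, residue in enumerate(sequence):
        if rng.random() >= mask_rate:
            continue  # position not selected, left untouched
        targets[i] = residue
        roll = rng.random()
        if roll < 0.8:
            tokens[i] = MASK  # hide the residue (assumed 80% of the time)
        elif roll < 0.9:
            tokens[i] = rng.choice(AMINO_ACIDS)  # swap in a random residue
        # otherwise keep the original residue, but still predict it
    return tokens, targets


tokens, targets = corrupt("MKTAYIAKQRQISFVKSHFSRQ", seed=0)
```

The training signal comes only from the positions in `targets`, which is exactly why this objective stays local to a single sequence.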

More generally, we want models that understand biology at the different levels at which biological properties emerge, because biology is inherently hierarchical. How best to tackle this is still an open question, but some research has been published on language models at the level of complete genomes. One study in particular takes this hierarchical nature explicitly into account by starting from a protein language model and training a second model on top of its outputs, one that learns from proteins in the context of other proteins (7). The authors go on to show that their model learns co-regulation and functional semantics across the genome. Such an approach looks promising for uncovering complex relationships in genomic regions and is definitely an area that needs further development.
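
The two-level idea can be sketched as a toy data flow: one function turns each protein into a vector, a second one re-reads every vector in the context of its genomic neighbours. Both functions are deliberately crude stand-ins I made up for illustration (amino-acid composition instead of a protein language model, neighbour averaging instead of a trained second model), and the sequences are invented; this is not the method from the study.

```python
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"


def embed_protein(sequence):
    """Level 1 stand-in for a protein language model.

    Here: the fraction of each standard amino acid in the sequence.
    """
    n = len(sequence)
    return [sequence.count(aa) / n for aa in AMINO_ACIDS]


def contextualize(protein_vectors, window=1):
    """Level 2 stand-in for a model that sees proteins in genomic context.

    Here: average each protein's vector with its neighbours within `window`.
    """
    out = []
    for i in range(len(protein_vectors)):
        lo = max(0, i - window)
        hi = min(len(protein_vectors), i + window + 1)
        neighbours = protein_vectors[lo:hi]
        out.append([sum(col) / len(neighbours) for col in zip(*neighbours)])
    return out


# Made-up protein sequences, listed in their (hypothetical) genomic order.
genome = ["MKTAYIAK", "MSTNPKPQ", "MALWMRLL"]
level1 = [embed_protein(p) for p in genome]  # one vector per protein
level2 = contextualize(level1)               # same shape, now context-aware
```

The point of the sketch is only the shape of the pipeline: the second level never sees amino acids, it sees proteins, which is where properties such as co-regulation could in principle become learnable.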

It also seems evident that biological AI models should learn from multiple types of inputs (i.e. multimodality). For example, some parts of a genome are non-coding, yet we’ve learned a lot about the importance of these parts in recent years. That information is simply not present at the protein level. Bottom line: my hypothesis is that we’ll need multiple inputs into these models to simulate cells in a profound way.

The other things: data and compute

So far we’ve covered the outlook and remaining challenges from the AI side, but to train AI models we also need appropriate data and sufficient compute. This is why adjacent advances in multi-omics technologies and computing hardware are just as key to advancing this whole field.

The Chan Zuckerberg Initiative has recently announced that it is building one of the largest GPU clusters for non-profit research and aims to simulate human cells on it. As Steve Quake says in this Big Think video: “Over the next 20 years, we’re going to see a profound change in how we do science. Many experiments that are expensive and difficult now will be done in silico, by computers.” These are definitely (part of) the investments we need to keep moving forward.

Conclusion

The big conclusion here is two-sided. On the one hand, there is a lot going on and a lot of great progress being made. On the other hand, there is still so much to discover and so much to improve.

It seems plausible that in the coming years we will be able to simulate diverse biological entities increasingly well in silico, which will in turn lead to the ability to design biology from scratch. But innovation doesn’t happen by itself. That is why we need both talent in this space working on the cutting edge and investments that enable us to tackle big problems.